GENEA Workshop 2025

Official ACM Multimedia 2025 Workshop – October 28, 2025 (in person)

The GENEA (Generation and Evaluation of Non-verbal Behaviour for Embodied Agents) Workshop 2025 aims to bring together researchers from diverse disciplines working on different aspects of non-verbal behaviour generation, facilitating discussions on advancing both generation techniques and evaluation methodologies.

We invite contributions from fields such as human-computer interaction, machine learning, multimedia, robotics, computer graphics, and social sciences. This is the sixth installment of the GENEA Workshop. To learn more about the GENEA Initiative, please go here.

The workshop proceedings can be found here.

Planned Workshop programme

ALL TIMES IN DUBLIN LOCAL TIME (UTC+1)

- Evaluating Automatic Hand-Gesture Generation Using Multimodal Corpus Annotations: The Benefits of a Multidisciplinary Approach (Mickaëlla Grondin Verdon et al.)

- SARGes: Semantically Aligned Reliable Gesture Generation via Intent Chain (Nan Gao et al.)

- From Embeddings to Language Models: A Comparative Analysis of Feature Extractors for Text-Only and Multimodal Gesture Generation (Johsac Isbac Gomez Sanchez et al.)

- SemGest: A Multimodal Feature Space Alignment and Fusion Framework for Semantic-aware Co-speech Gesture Generation (Yo-Hsin Fang et al.)

- Catherine Pelachaud, Asli Ozyurek, Rachel McDonnell, and Shalini De Mello.

Important dates

Submission deadlines: All deadlines are set at the end of the day, Anywhere on Earth (AoE).

Call for papers

GENEA 2025 is the sixth GENEA Workshop and an official workshop of ACM MM ’25, which will take place in Dublin, Ireland. Accepted paper submissions will be included in dedicated proceedings by the ACM.

Generating non-verbal behaviours, such as gesticulation, facial expressions and gaze, is of great importance for natural interaction with embodied agents such as virtual agents and social robots. At present, behaviour generation is typically powered by rule-based systems, data-driven approaches like generative AI, or their hybrids. For evaluation, both objective and subjective methods exist, but their application and validity are frequently a point of contention.

The aim of the GENEA Workshop is to bring together researchers working on the generation and evaluation of non-verbal behaviours for embodied agents. The goal is to

- facilitate knowledge transfer and discussion across different communities and research fields;

- promote data, resources, evaluation, and reproducibility, while evolving best practices in these areas; and

- provide an inclusive environment where new and established researchers can learn from each other.

To kickstart this process, we invite all interested researchers to submit a paper or a poster for presentation at the workshop, and to attend the event.

Paper topics include (but are not limited to) the following:

- Automated synthesis of facial expressions, gestures, and gaze movements, including multi-modal synthesis

- Audio-, music- and emotion-driven or stylistic non-verbal behaviour synthesis

- Closed-loop/end-to-end non-verbal behaviour generation (from perception to action)

- Non-verbal behaviour synthesis in two-party and group interactions

- Using LLMs/VLMs in the context of non-verbal behaviour synthesis

- New datasets, annotation methods, and analyses of existing datasets related to non-verbal behaviour

- Cross-cultural and multilingual influences on non-verbal behaviour generation

- Cognitive and affective models for non-verbal behaviour generation

- Social perception and attribution of synthesised non-verbal behaviour

- Ethical considerations and biases in non-verbal behaviour synthesis

- Subjective and objective evaluation methods for all of the above topics

Submission guidelines

We will accept long (8 pages) and short (4 pages) paper submissions, all in the same double-column ACM conference format as used by ACM MM. Pages containing only references do not count toward the page limit for any of the paper types. Submissions should be made in PDF format through OpenReview and formatted for double-blind review.

Submission site: https://openreview.net/group?id=acmmm.org/ACMMM/2025/Workshop/GENEA

To encourage authors to make their work reproducible and reward the effort that this requires, we have introduced the GENEA Workshop Reproducibility Award.

We will also host an open poster session for advertising your late-breaking results and already-published work to the community. No paper submission is needed to participate in the poster session, and these posters will not be part of any proceedings (non-archival). Submission guidelines for the poster session will be available on the workshop website.

Call for posters

The GENEA Workshop at ACM Multimedia 2025 will host an open poster session for advertising your late-breaking results and recently published work to the community. Only a poster submission is required; no paper submission is needed to participate in the poster session, and these posters will not be part of any proceedings (i.e., they are non-archival). However, presenting a poster requires a valid ACM Multimedia registration to attend the workshop and is subject to space constraints.

Topics include (but are not limited to) the following:

- Automated synthesis of facial expressions, gestures, and gaze movements, including multi-modal synthesis

- Audio-, music- and emotion-driven or stylistic non-verbal behaviour synthesis

- Closed-loop/end-to-end non-verbal behaviour generation (from perception to action)

- Non-verbal behaviour synthesis in two-party and group interactions

- Using LLMs/VLMs in the context of non-verbal behaviour synthesis

- New datasets, annotation methods, and analyses of existing datasets related to non-verbal behaviour

- Cross-cultural and multilingual influences on non-verbal behaviour generation

- Cognitive and affective models for non-verbal behaviour generation

- Social perception and attribution of synthesised non-verbal behaviour

- Ethical considerations and biases in non-verbal behaviour synthesis

- Subjective and objective evaluation methods for all of the above topics

Poster guidelines

- Poster format: 1-page poster (no larger than A0 size; portrait is recommended). There is no specific template; you are free to design the poster as you wish.

- How to submit: please submit your poster draft (in PDF format) and/or poster abstract here (https://forms.gle/TqeTZyP7TSpsXJTXA). We will acknowledge your submission within 24 hours. The submission deadline is 23:59, September 19, 2025 (Anywhere on Earth timezone). We will get back to you no later than October 1st to let you know if we are able to accommodate your poster at the event.

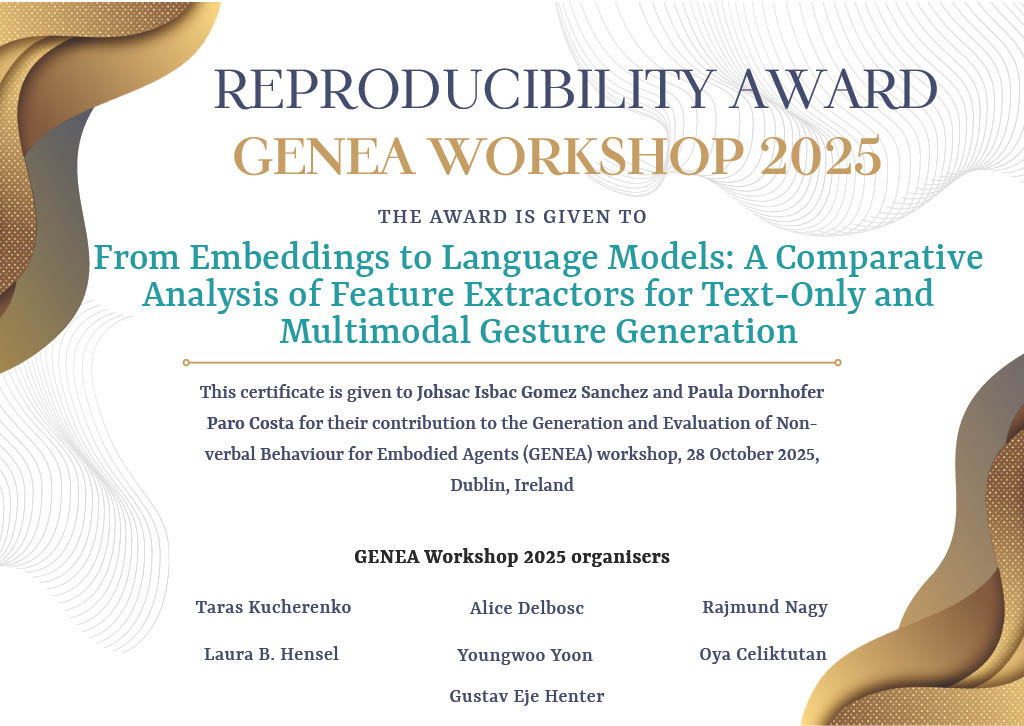

Reproducibility Award

Reproducibility is a cornerstone of the scientific method. Lack of reproducibility is a serious issue in contemporary research that we want to address at our workshop. To encourage authors to make their papers reproducible, and to reward the effort that reproducibility requires, we are introducing the GENEA Workshop Reproducibility Award. All short and long papers presented at the GENEA Workshop will be eligible for this award. Please note that it is the camera-ready version of the paper that will be evaluated for the award. The award is given to the paper with the greatest degree of reproducibility. The assessment criteria include:

- ease of reproduction (ideal: it just works; if there is code, it is well documented and we can run it)

- extent (ideal: all results can be verified)

- data accessibility (ideal: all data used is publicly available)

by Johsac Isbac Gomez Sanchez, Paula Dornhofer Paro Costa.

Invited speakers

Catherine Pelachaud

Biography

Catherine Pelachaud is a CNRS Director of Research in the laboratory ISIR, Sorbonne University. Her research interests include socially interactive agents, nonverbal communication (face, gaze, gesture, and touch), and adaptive mechanisms in interaction. With her research team, she has been developing an interactive virtual agent platform, Greta, that can display emotional and communicative behaviors. She is co-editor of the ACM handbook on socially interactive agents (2021-22). She is the recipient of the ACM SIGAI Autonomous Agents Research Award 2015 and the ICMI Sustained Accomplishment Award 2024, and was honored with the title Doctor Honoris Causa of the University of Geneva in 2016. Her SIGGRAPH ’94 paper received the Influential Paper Award of IFAAMAS (the International Foundation for Autonomous Agents and Multiagent Systems).

Talk: Adapting Verbal and Nonverbal Behaviors of an SIA

During an interaction, participants exhibit a variety of adaptation mechanisms, which can take many forms, from signal imitation to synchronization and conversational strategies. The adaptation of the multimodal behaviours of a socially interactive agent to the behaviours of its human interlocutors has been demonstrated to promote engagement, build rapport and trust, and support the learning process. In this presentation, I will introduce computational approaches that have been developed to generate communicative and socio-emotional behaviours to convey intentions and affect. I will also outline the experimental methods we have used to validate these approaches and introduce the objective and subjective measures employed. The presentation will focus on Greta, an open-source system that facilitates the modelling of human-agent interactions.

Asli Ozyurek

Biography

Since 2022, Prof. Dr. Asli Özyürek has been the Scientific Director of the Multimodal Language Department (with over 40 scientific members) at the Max Planck Institute for Psycholinguistics of the Max Planck Society. She is also a PI at the Donders Institute for Brain, Cognition and Behaviour at Radboud University. Özyürek received a joint PhD in Linguistics and Psychology from the University of Chicago. She investigates the inherently and universally multimodal nature of the human language capacity as one of its adaptive design features. To do so, she studies how humans and machines produce and process gestures with spoken language and sign languages; how the brain supports multimodal language; how typologically different spoken and signed languages pattern their structures given their multimodal diversity; and how cognition, learning constraints and the communicative pressures of interaction shape multimodal language, its acquisition and its evolution as an adaptive system. She uses a variety of methodologies, such as behavioral and kinematic analyses of multimodal linguistic structures, eye tracking, machine learning, computational modeling, virtual reality and brain imaging, to understand the complex multimodal nature of the human language capacity and how it is recruited in different communicative settings. She has received many prestigious grants from the NSF, NIH, Dutch Science Foundation (VIDI, VICI), ERC, EU Commission and Turkish Science Foundation, and is an elected fellow of the Cognitive Science Society and the Academia Europaea.

Talk: What insights can human cognition offer to enhance computational models of multimodal language generation and evaluation?

Most models for generating semantically and contextually appropriate co-speech gestures use existing speech and gesture datasets as ground truth to train on and to generate novel gestures. These mostly use fusion models that combine the motion kinematics of gestures with the semantics of co-occurring speech, and they have recently been enhanced by using LLMs to label the training gesture data. While these methods manage to generate somewhat semantically appropriate gestures, this remains a challenging task, especially because in these models what a gesture conveys is mostly defined by the contextual speech surrounding it and by what can be labelled with categories of speech/text. In my talk, I will outline the types of knowledge that gesture generation models should take into account, drawing on what we have learned about how humans produce and comprehend multimodal language in interaction, in order to inspire generative models.

Recent advances in psycholinguistics have shown that gestures are partially driven by factors beyond the speech content they co-occur with or can be described by, such as 1) the semantic categories of the objects, actions and events they represent, as well as how humans visually process them; 2) the communicative intent they are produced with (i.e., regarding their size and velocity); and 3) the interlocutor's speech and gestures (i.e., through alignment, feedback or other-initiated repair) as well as the interlocutor's knowledge state (i.e., common ground). This points to the need to include information about these factors in training datasets (e.g., by using interactive datasets, adding labels for these interactive factors, and including data on the visual input to gestures) to enrich the information that models take into account.
Finally, I will discuss how evaluation methods for new generative models can be enhanced by our recent findings on human gesture understanding and (neuro)processing in context, such as sensitivity to speech-gesture synchrony, ambiguity resolution, processing of iconicity, prediction, and turn-taking in multimodal contexts.

I will argue that incorporating knowledge from human cognition and multimodal language processing into generative models will make virtual models not only more human-like but also more interactive.

Accepted papers

SARGes: Semantically Aligned Reliable Gesture Generation via Intent Chain

Nan Gao, Yihua Bao, Dongdong Weng, Jiayi Zhao, Jia Li, Yan Zhou, Pengfei Wan

SemGest: A Multimodal Feature Space Alignment and Fusion Framework for Semantic-aware Co-speech Gesture Generation

Yo-Hsin Fang, Vijay John, Yasutomo Kawanishi

From Embeddings to Language Models: A Comparative Analysis of Feature Extractors for Text-Only and Multimodal Gesture Generation

Johsac Isbac Gomez Sanchez, Paula Dornhofer Paro Costa

Evaluating Automatic Hand-Gesture Generation Using Multimodal Corpus Annotations: The Benefits of a Multidisciplinary Approach

Mickaëlla Grondin Verdon, Domitille Caillat, Louis Abel, Slim Ouni

Organising committee

The main contact address of the workshop is: genea-contact@googlegroups.com.

Workshop organisers

Join our community

Join our Slack space dedicated to the gesture generation research community.

To ensure the security and integrity of our community, we kindly ask you to fill out this form to obtain the invitation link.

Join us to share your work, data, interesting papers, questions, ideas, job opportunities and more.

And keep up to date with the latest workshop news by following us on X.