Workshop 2023

★ Latest news ★

The GENEA Workshop will be held again at ICMI 2024 this year! Please visit this link for details.

Official ICMI 2023 Workshop – October 9 (in person)

The GENEA (Generation and Evaluation of Non-verbal Behaviour for Embodied Agents) Workshop 2023 aims to bring together researchers who use different methods for non-verbal behaviour generation and evaluation, and hopes to stimulate discussion on how to improve both the generation methods and the evaluation of the results. We invite all interested researchers to submit a paper related to their work in the area and to participate in the workshop. This is the fourth installment of the GENEA Workshop; for more information about the 2022 installment, please see the 2022 workshop page.

Important dates

Submission Deadlines: All deadlines are set at the end of the day, Anywhere on Earth (AoE)

Planned Workshop programme

ALL TIMES IN PARIS' LOCAL TIMEZONE (UTC+2)

- A Methodology for Evaluating Multimodal Referring Expression Generation for Embodied Virtual Agents (Alalyani et al.)

- Look What I Made It Do - The ModelIT Method for Manually Modeling Nonverbal Behavior of Socially Interactive Agents (Reinwarth et al.)

- Listen, denoise, action! Audio-driven motion synthesis with diffusion models (Alexanderson et al.)

- Diff-TTSG: Denoising probabilistic integrated speech and gesture synthesis (Mehta et al.)

- Towards the generation of synchronized and believable non-verbal facial behaviors of a talking virtual agent (Delbosc et al.)

- MultiFacet: A Multi-Tasking Framework for Speech-to-Sign Language Generation (Kanakanti et al.)

Call for papers

GENEA 2023 is the fourth GENEA Workshop and an official workshop of ACM ICMI ’23, which will take place in Paris, France. Accepted paper submissions will be included in the adjunct ACM ICMI proceedings.

Generating non-verbal behaviours, such as gesticulation, facial expressions and gaze, is of great importance for natural interaction with embodied agents such as virtual agents and social robots. At present, behaviour generation is typically powered by rule-based systems, data-driven approaches, and their hybrids. For evaluation, both objective and subjective methods exist, but their application and validity are frequently a point of contention.

This workshop asks, “What will be the behaviour-generation methods of the future? And how can we evaluate these methods using meaningful objective and subjective metrics?” The aim of the workshop is to bring together researchers working on the generation and evaluation of non-verbal behaviours for embodied agents to discuss the future of this field. To kickstart these discussions, we invite all interested researchers to submit a paper for presentation at the workshop.

Paper topics include (but are not limited to) the following:

- Automated synthesis of facial expressions, gestures, and gaze movements

- Audio- and music-driven nonverbal behaviour synthesis

- Closed-loop nonverbal behaviour generation (from perception to action)

- Nonverbal behaviour synthesis in two-party and group interactions

- Emotion-driven and stylistic nonverbal behaviour synthesis

- New datasets related to nonverbal behaviour

- Believable nonverbal behaviour synthesis using motion-capture and 4D scan data

- Multi-modal nonverbal behaviour synthesis

- Interactive/autonomous nonverbal behaviour generation

- Subjective and objective evaluation methods for nonverbal behaviour synthesis

- Guidelines for nonverbal behaviours in human-agent interaction

We will accept long (8 pages) and short (4 pages) paper submissions, all in the same double-column ACM conference format as used by ICMI. Pages containing only references do not count toward the page limit for either paper type. Submissions should be anonymised for double-blind review and submitted in PDF format through OpenReview.

Submission site: https://openreview.net/group?id=ACM.org/ICMI/2023/Workshop/GENEA

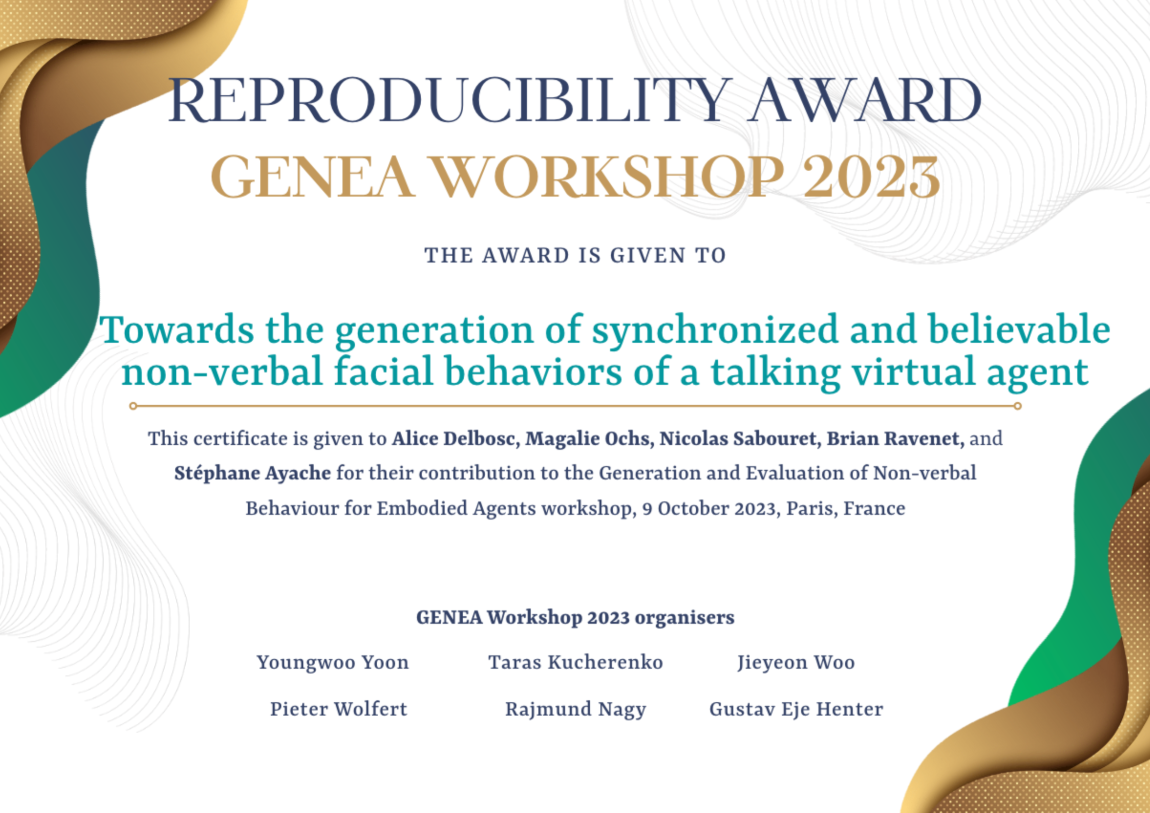

To encourage authors to make their work reproducible and reward the effort that this requires, we have introduced the GENEA Reproducibility Award.

We will also host an open poster session for advertising your late-breaking results and already-published work to the community. No paper submission is needed to participate in the poster session, and these posters will not be part of any proceedings (non-archival). Submission guidelines can be found below.

Call for posters

The GENEA Workshop at ACM ICMI 2023 will host an open poster session for advertising your late-breaking results and recently published work to the community. Only a poster submission is required; no paper submission is needed to participate in the poster session, and these posters will not be part of any proceedings (i.e., non-archival). However, poster presentation requires a valid ICMI registration to attend the workshop and is subject to space constraints.

Poster topics are the same as those listed in the call for papers above.

Poster guidelines

- Poster format: 1-page poster (no larger than A0 size; portrait is recommended). There is no specific template; you may design the poster as you wish.

- How to submit: Please submit your poster draft (in PDF format) via this form: https://forms.gle/4moUyfaKTcvrsUAaA. We will acknowledge your submission within 24 hours. The submission deadline is 23:59, August 18, 2023 (Anywhere on Earth timezone). We will get back to you no later than September 1 to let you know whether we are able to accommodate your poster at the event.

Reproducibility Award

Reproducibility is a cornerstone of the scientific method. Lack of reproducibility is a serious issue in contemporary research, and one we want to address at our workshop. To encourage authors to make their papers reproducible, and to reward the effort that reproducibility requires, we are introducing the GENEA Workshop Reproducibility Award. All short and long papers presented at the GENEA Workshop will be eligible for this award. Please note that it is the camera-ready version of the paper that will be evaluated for the award. The award goes to the paper with the greatest degree of reproducibility. The assessment criteria include:

- ease of reproduction (ideal: it just works; if there is code, it is well documented and we can run it)

- extent (ideal: all results can be verified)

- data accessibility (ideal: all data used is publicly available)

by Alice Delbosc, Magalie Ochs, Nicolas Sabouret, Brian Ravenet, Stéphane Ayache.

Invited speakers

Rachel McDonnell

Biography

Rachel McDonnell is an Associate Professor in Creative Technologies at the School of Computer Science and Statistics at Trinity College Dublin. She is also a Fellow of Trinity College, a Principal Investigator in the ADAPT Research Centre, and a member of the Graphics, Vision, and Visualisation Group. She received her PhD in Computer Graphics in 2006 from TCD. Rachel McDonnell's research interests include computer graphics, character animation, virtual humans, VR, and perception. Her main focus is on real-time performance capture and perception of virtual humans.

Talk - Metaverse Mayhem: Importance of the design in pervasive virtual agents

Recent developments in digital human technologies enable communication with intelligent agents in immersive virtual environments. In the future, it is likely that we will be conversing with virtual agents much more often for a wide range of interactions including customer support, education, healthcare, virtual shopping assistants, entertainment, language translation, financial services, and even potentially for therapy and counselling. Virtual agents have few constraints in terms of design, and can be programmed to take on a multitude of different appearance, motion and voice styles. In this talk, I will discuss research that I have conducted over the years on the perception of digital humans, with a focus on how appearance, motion, and voice are perceived, in particular when characters are conversing and gesturing. I will also discuss the implications for agent-based interactions in the ‘Metaverse’ as technology develops, and some of the open questions in this research area.

Sean Andrist

Biography

Sean Andrist is a senior researcher at Microsoft Research in Redmond, Washington. His research interests involve designing, building, and evaluating socially interactive technologies that are physically situated in the open world, particularly embodied virtual agents and robots. He is currently working on the Platform for Situated Intelligence project, an open-source framework designed to accelerate research and development on a broad class of multimodal, integrative-AI applications. He received his Ph.D. from the University of Wisconsin-Madison, where he primarily researched effective social gaze behaviors in human-robot and human-agent interaction.

Talk - Situated Interaction with Socially Intelligent Systems: New Challenges and Tools

In this talk, I will introduce a research effort at Microsoft Research that we call “Situated Interaction,” in which we strive to design and develop intelligent technologies that can reason deeply about their surroundings and engage in fluid interaction with people in physically and socially situated settings. Our research group has developed a number of situated interactive systems for long-term in-the-wild deployment, including smart elevators, virtual agent receptionists, and directions-giving robots, and we have encountered a host of fascinating and unforeseen challenges along the way. I will close by presenting the Platform for Situated Intelligence (\psi), an open-source extensible platform meant to enable the fast development and study of this class of systems, which we hope will empower the research community to make faster progress on tackling the hard open challenges.

Accepted papers

MultiFacet: A Multi-Tasking Framework for Speech-to-Sign Language Generation

Mounika Kanakanti, Shantanu Singh, Manish Shrivastava

Look What I Made It Do - The ModelIT Method for Manually Modeling Nonverbal Behavior of Socially Interactive Agents

Anna Lea Reinwarth, Tanja Schneeberger, Fabrizio Nunnari, Patrick Gebhard, Uwe Altmann, Janet Wessler

A Methodology for Evaluating Multimodal Referring Expression Generation for Embodied Virtual Agents

Nada Alalyani, Nikhil Krishnaswamy

Towards the generation of synchronized and believable non-verbal facial behaviors of a talking virtual agent

Alice Delbosc, Magalie Ochs, Nicolas Sabouret, Brian Ravenet, Stéphane Ayache

Organising committee

The main contact address of the workshop is: genea-contact@googlegroups.com.

Workshop organisers