Workshop 2022

Official ICMI 2022 Workshop – November 7 (Hybrid)

The GENEA (Generation and Evaluation of Non-verbal Behaviour for Embodied Agents) Workshop 2022 aims to bring together researchers who use different methods for non-verbal-behaviour generation and evaluation, and hopes to stimulate discussion on how to improve both the generation methods and the evaluation of the results. We invite all interested researchers to submit a paper related to their work in the area and to participate in the workshop. This is the third installment of the GENEA Workshop; to learn more about the GENEA Initiative, please go here.

Important dates

Planned Workshop programme

All times in India Standard Time

- Understanding Interviewees’ Perceptions and Behaviour towards Verbally and Non-verbally Expressive Virtual Interviewing Agents by Jinal Thakkar, Pooja S. B. Rao, Kumar Shubham, Vaibhav Jain, Dinesh Babu Jayagopi

- Emotional Respiration Speech Dataset by Rozemarijn Roes, Francisca Pessanha, Almila Akdag

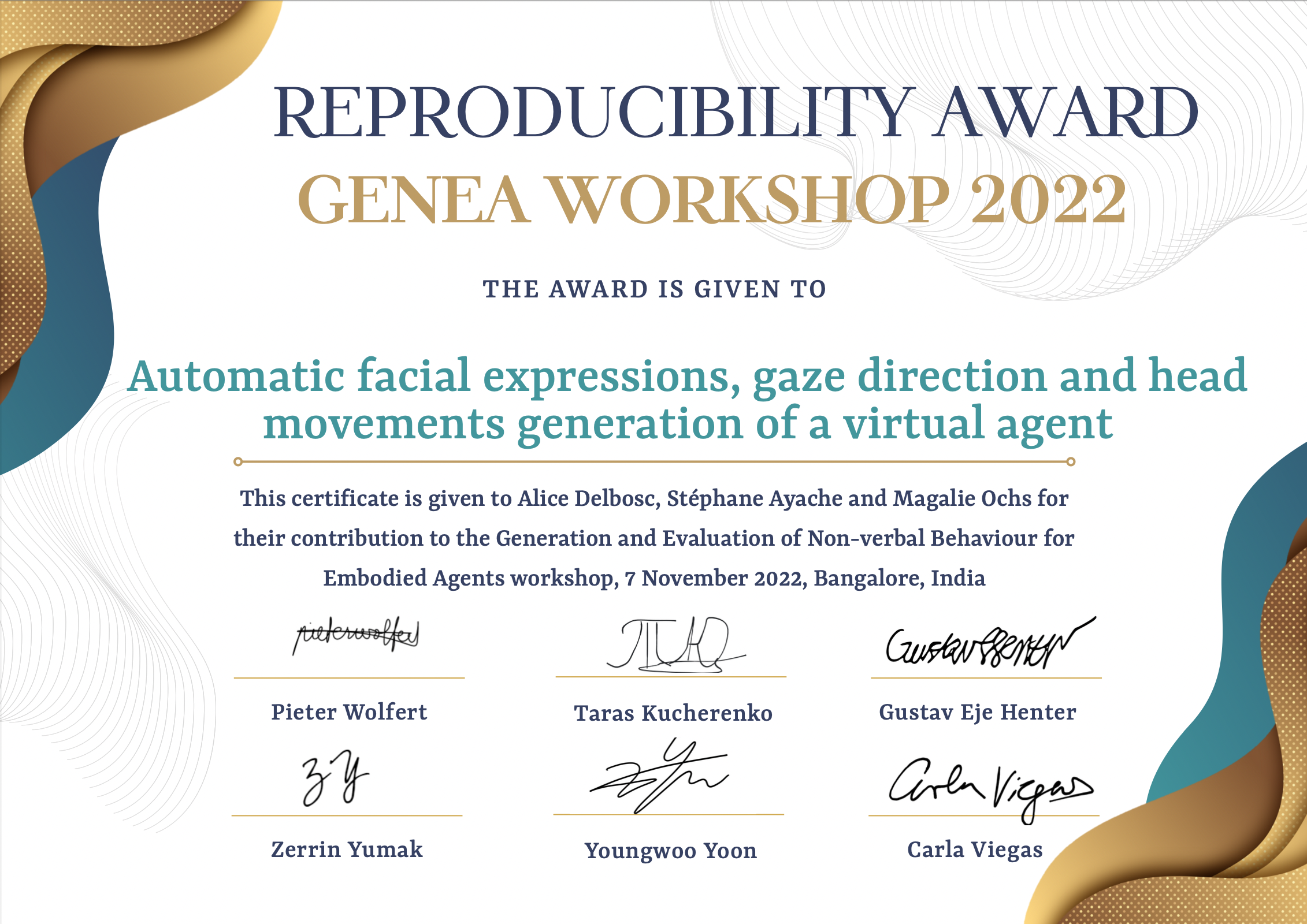

- Automatic facial expressions, gaze direction and head movements generation of a virtual agent by Alice Delbosc, Stéphane Ayache, Magalie Ochs

- Can you tell that I'm confused? An overhearer study for German backchannels by an embodied agent by Isabel Donya Meywirth, Jana Götze

Call for papers

GENEA 2022 is the third GENEA workshop and an official workshop of ACM ICMI’22. It will be hybrid, taking place both in Bangalore, India, and online. Accepted workshop submissions will be included in the adjunct ACM ICMI proceedings.

Generating non-verbal behaviours, such as gesticulation, facial expressions and gaze, is of great importance for natural interaction with embodied agents such as virtual agents and social robots. At present, behaviour generation is typically powered by rule-based systems, data-driven approaches, and their hybrids. For evaluation, both objective and subjective methods exist, but their application and validity are frequently a point of contention.

This workshop asks, “What will be the behaviour-generation methods of the future? And how can we evaluate these methods using meaningful objective and subjective metrics?” The aim of the workshop is to bring together researchers working on the generation and evaluation of non-verbal behaviours for embodied agents to discuss the future of this field. To kickstart these discussions, we invite all interested researchers to submit a paper for presentation at the workshop.

Paper topics include (but are not limited to) the following:

- Automated synthesis of facial expressions, gestures, and gaze movements

- Audio- and music-driven nonverbal behaviour synthesis

- Closed-loop nonverbal behaviour generation (from perception to action)

- Nonverbal behaviour synthesis in two-party and group interactions

- Emotion-driven and stylistic nonverbal behaviour synthesis

- New datasets related to nonverbal behaviour

- Believable nonverbal behaviour synthesis using motion-capture and 4D scan data

- Multi-modal nonverbal behaviour synthesis

- Interactive/autonomous nonverbal behaviour generation

- Subjective and objective evaluation methods for nonverbal behaviour synthesis

- Guidelines for nonverbal behaviours in human-agent interaction

Submission guidelines

Please format submissions for double-blind review according to the ACM conference format.

We will accept long (8 pages) and short (4 pages) paper submissions, all in the double-column ACM conference format. Pages containing only references do not count toward the page limit for any of the paper types. Submissions should be made in PDF format through OpenReview using this URL.

To encourage authors to make their work reproducible and reward the effort that this requires, we have introduced the GENEA Reproducibility Award.

Reproducibility Award

Reproducibility is a cornerstone of the scientific method. Lack of reproducibility is a serious issue in contemporary research, which we want to address at our workshop. To encourage authors to make their papers reproducible, and to reward the effort that reproducibility requires, we are introducing the GENEA Workshop Reproducibility Award. All short and long papers presented at the GENEA Workshop will be eligible for this award. Please note that it is the camera-ready version of the paper which will be evaluated for the award. The award goes to the paper with the greatest degree of reproducibility. The assessment criteria include:

- ease of reproduction (ideal: it just works; if there is code, it is well documented and we can run it)

- extent (ideal: all results can be verified)

- data accessibility (ideal: all data used is publicly available)

by Alice Delbosc, Stéphane Ayache, Magalie Ochs.

Invited speakers

Carlos T. Ishi (RIKEN and ATR)

Biography

Carlos T. Ishi received the PhD degree in engineering from The University of Tokyo, Japan. He joined ATR Intelligent Robotics and Communication Labs in 2005, and has been the group leader of the Dept. of Sound Environment Intelligence since 2013. He joined the Guardian Robot Project, RIKEN, in 2020. His research topics include analysis and processing of dialogue speech and non-verbal behaviors applied for human-robot interaction.

Talk - Analysis and generation of speech-related motions, and evaluation in humanoid robots

The generation of motions coordinated with speech utterances is important for dialogue robots or avatars, in both autonomous and tele-operated systems, to express humanlikeness and tele-presence. For that purpose, we have been studying the relationships between speech and motion, and methods to generate motions from speech, for example, lip motion from formants, head motion from dialogue functions, facial and upper body motions coordinated with vocalized emotional expressions (such as laughter and surprise), hand gestures from linguistic and prosodic information, and gaze behaviors from dialogue states. In this talk, I will give an overview of our research activities on motion analysis and generation, and evaluation of speech-driven motions generated in several humanoid robots (such as the android ERICA, and a desktop robot CommU).

Judith Holler (Radboud University)

Biography

Judith is Associate Professor and PI at the Donders Institute for Brain, Cognition, & Behaviour (Radboud University) and leader of the research group Communication in Social Interaction at the Max Planck Institute for Psycholinguistics. Judith has been a Marie Curie Fellow and currently holds an ERC Consolidator grant. The focus of her work is on the interplay of speech and visual bodily signals from the hands, head, face, and eye gaze, in communicating meaning in interaction. In her scientific approach, she combines analyses of natural language corpora with experimental testing, and methods from a wide range of fields, including gesture studies, linguistics, psycholinguistics, and neuroscience. In her most recent projects, she also combines these methods with cutting-edge tools and techniques, such as virtual reality, mobile eyetracking, and dual-EEG, to further our insights into multimodal communication and coordination in social interaction.

Talk - Towards generating artificial agents with multimodal pragmatic behaviour

Traditionally, visual bodily movements have been associated with the communication of affect and emotion. In this talk, I will throw light on the pragmatic contribution that visual bodily movements make in conversation. In doing so, I will focus on fundamental processes that are key in achieving mutual understanding in talk: producing recipient-designed messages, signalling understanding, trouble in understanding and repairing problems in understanding, and the communication of social actions (or “speech” acts). The findings I present highlight the need for equipping artificial agents with multimodal pragmatic capacities, and suggest some ways that may be a fruitful starting point for doing so.

Accepted papers

Understanding Interviewees’ Perceptions and Behaviour towards Verbally and Non-verbally Expressive Virtual Interviewing Agents

Jinal Thakkar, Pooja S. B. Rao, Kumar Shubham, Vaibhav Jain, Dinesh Babu Jayagopi

Emotional Respiration Speech Dataset

Rozemarijn Roes, Francisca Pessanha, Almila Akdag

Automatic facial expressions, gaze direction and head movements generation of a virtual agent

Alice Delbosc, Stéphane Ayache, Magalie Ochs

Can you tell that I'm confused? An overhearer study for German backchannels by an embodied agent

Isabel Donya Meywirth, Jana Götze

Organising committee

The main contact address of the workshop is: genea-contact@googlegroups.com.

Workshop organisers

Program committee

- Chaitanya Ahuja (Carnegie Mellon University, United States of America)

- Sean Andrist (Microsoft Research, United States of America)

- Jonas Beskow (KTH Royal Institute of Technology, Sweden)

- Uttaran Bhattacharya (University of Maryland, United States of America)

- Carlos Busso (University of Texas at Dallas, United States of America)

- Yingruo Fan (The University of Hong Kong, Hong Kong)

- David Greenwood (University of East Anglia, England)

- Dai Hasegawa (Hokkai Gakuen University, Japan)

- Carlos Ishi (Advanced Telecommunications Research Institute International, Japan)

- Minsu Jang (Electronics and Telecommunications Research Institute, South Korea)

- James Kennedy (Futronics NA Corporation, United States of America)

- Vladislav Korzun (Moscow Institute of Physics and Technology, Russia)

- Nikhil Krishnaswamy (Colorado State University, United States of America)

- Rachel McDonnell (Trinity College Dublin, Ireland)

- Tan Viet Tuyen Nguyen (King's College London, England)

- Kunkun Pang (Guangdong Institute of Intelligent Manufacturing, China)

- Catherine Pelachaud (Sorbonne University, France)

- Tiago Ribeiro (Soul Machines, New Zealand)

- Carolyn Saund (University of Glasgow, Scotland)

- Hiroshi Shimodaira (University of Edinburgh, Scotland)

- Jonathan Windle (University of East Anglia, England)

- Bowen Wu (Osaka University, Japan)