GENEA Workshop 2021

Official workshop of ICMI 2021 – October 22

The GENEA (Generation and Evaluation of Non-verbal Behaviour for Embodied Agents) Workshop 2021 aims to bring together researchers who use different methods for non-verbal-behaviour generation and evaluation, and hopes to stimulate discussion on how to improve both the generation methods and the evaluation of the results. We invite all interested researchers to submit a paper related to their work in the area and to participate in the workshop. This is the second installment of the GENEA Workshop; for more information about the 2020 installment, please go here.

IMPORTANT

The workshop is an official workshop of ACM ICMI’21 and will be held virtually over Zoom on October 22nd (Zoom links are provided by the ICMI organizers via email). For information about registration for the workshop, please go to the official ICMI registration page. Please note that at least one of the paper authors needs to be registered to "cover" the submitted papers.

Please follow this link for GENEA Workshop 2022.

Important dates

Timeline for regular workshop submissions:

Workshop programme

- 08:50 Wu et al. Probabilistic Human-like Gesture Synthesis from Speech using GRU-based WGAN

- 09:05 Schneeberger et al. Influence of Movement Energy and Affect Priming on the Perception of Virtual Characters Extroversion and Mood

- 09:20 Lee et al. Crossmodal clustered contrastive learning: Grounding of spoken language to gesture

Call for papers

Overview

Generating nonverbal behaviours, such as gesticulation, facial expressions and gaze, is of great importance for natural interaction with embodied agents such as virtual agents and social robots. At present, behaviour generation is typically powered by rule-based systems, data-driven approaches, and their hybrids. For evaluation, both objective and subjective methods exist, but their application and validity are frequently a point of contention.

This workshop asks “What will be the behaviour-generation methods of the future? And how can we evaluate these methods using meaningful objective and subjective metrics?” The aim of the workshop is to bring together researchers working on the generation and evaluation of nonverbal behaviours for embodied agents to discuss the future of this field. To kickstart these discussions, we invite all interested researchers to submit a paper for presentation at the workshop.

GENEA 2021 is the second GENEA workshop and an official workshop of ACM ICMI’21, which will take place either in Montreal, Canada, or online. Accepted submissions will be included in the adjunct ACM ICMI proceedings.

Paper topics include (but are not limited to) the following:

- Automated synthesis of facial expressions, gestures, and gaze movements

- Audio- and music-driven nonverbal behaviour synthesis

- Closed-loop nonverbal behaviour generation (from perception to action)

- Nonverbal behaviour synthesis in two-party and group interactions

- Emotion-driven and stylistic nonverbal behaviour synthesis

- New datasets related to nonverbal behaviour

- Believable nonverbal behaviour synthesis using motion-capture and 4D scan data

- Multi-modal nonverbal behaviour synthesis

- Interactive/autonomous nonverbal behaviour generation

- Subjective and objective evaluation methods for nonverbal behaviour synthesis

- Guidelines for nonverbal behaviours in human-agent interaction

Submission guidelines

The reviewing will be double blind, so submissions should be anonymous: do not include the authors' names, affiliations or any clearly identifiable information in the paper (including in the Acknowledgments and references). It is appropriate to cite past work of the authors if these citations are treated like any other (e.g., "Smith [5] approached this problem by..."); omit references only if they would obviously identify the authors. Paper chairs will desk reject non-anonymous papers after reviewing begins.

Submitted papers should conform to the latest ACM publication format. All authors should submit manuscripts for review in a double-column format to ensure adherence to page limits. Please note that a non-anonymous author block may require more space than the anonymized version.

For LaTeX templates and examples, please click on the following link: https://www.acm.org/publications/taps/word-template-workflow, download the zip package entitled Primary Article Template - LaTeX, and use the sample-sigconf.tex template with \documentclass[sigconf,review]{acmart} to add line numbers. We suggest that you use the LaTeX templates. You will find Word templates and examples on the same webpage. Authors who decide to use the Word template should be aware that an extra validation step may be required during the camera-ready process.

We will accept long (8 pages) and short (4 pages) paper submissions, along with posters (3-page papers), all in the double-column ACM conference format. Pages containing only references do not count toward the page limit for any of the paper types. Submissions should be made in PDF format through OpenReview.
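As a rough illustration of the LaTeX route described in the submission guidelines, a minimal anonymized skeleton might look like the sketch below. It assumes the acmart class from the ACM template package; the `anonymous` class option is not named in the guidelines and is used here as an assumption, and the title, author and institution are placeholders.

```latex
% Minimal anonymized submission sketch (assumes the acmart class is installed).
% 'sigconf,review' comes from the guidelines; 'anonymous' is an added assumption.
\documentclass[sigconf,review,anonymous]{acmart}

\begin{document}

\title{Placeholder Title}

% Placeholder author block; acmart hides it in the PDF while 'anonymous' is set.
\author{Author Name}
\affiliation{%
  \institution{Placeholder Institution}
  \country{Placeholder Country}}

% acmart expects the abstract before \maketitle.
\begin{abstract}
Placeholder abstract.
\end{abstract}

\maketitle

\section{Introduction}
Cite your own earlier work in the third person, like any other reference.

\end{document}
```

Removing the `anonymous` option for the camera-ready version restores the author block, which may take more space than the anonymized one.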

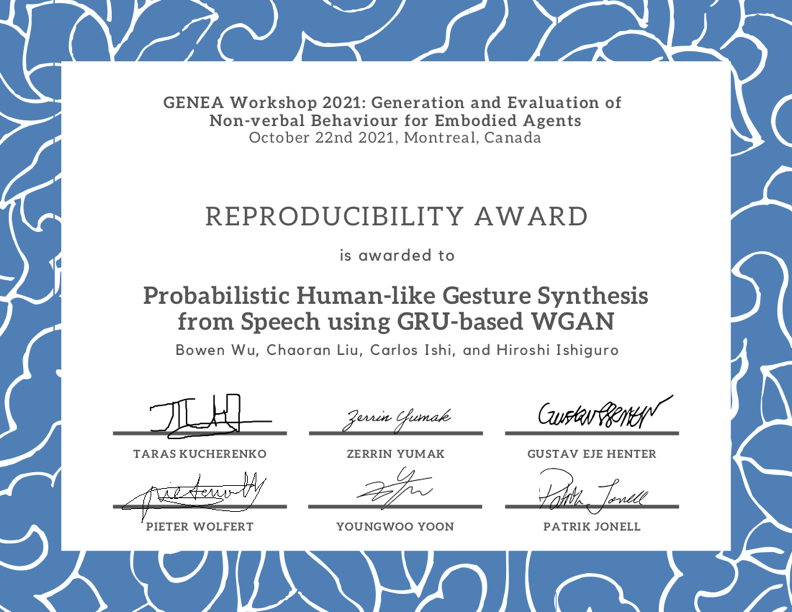

To encourage authors to make their work reproducible and reward the effort that this requires, we have introduced the GENEA Workshop Reproducibility Award.

Reproducibility Award

Reproducibility is a cornerstone of the scientific method. Lack of reproducibility is a serious issue in contemporary research, which we want to address at our workshop. To encourage authors to make their papers reproducible, and to reward the effort that reproducibility requires, we are introducing the GENEA Workshop Reproducibility Award. All short and long papers presented at the GENEA Workshop will be eligible for this award. Please note that it is the camera-ready version of the paper which will be evaluated for the award.

The award goes to the paper with the greatest degree of reproducibility. The assessment criteria include:

- ease of reproduction (ideal: it just works; if there is code, it is well documented and we can run it)

- extent (ideal: all results can be verified)

- data accessibility (ideal: all data used is publicly available)

by Bowen Wu, Chaoran Liu, Carlos Ishi, and Hiroshi Ishiguro.

Invited speakers

Hatice Gunes (University of Cambridge)

Biography

Hatice Gunes (Senior Member, IEEE) is a Professor with the Department of Computer Science and Technology, University of Cambridge, UK, leading the Affective Intelligence and Robotics (AFAR) Lab. Her expertise is in the areas of affective computing and social signal processing, cross-fertilizing research in multimodal interaction, computer vision, signal processing, machine learning and social robotics. Dr Gunes’ team has published over 120 papers in these areas (citations > 5,700) and has received various awards and competitive grants, with funding from the Engineering and Physical Sciences Research Council UK (EPSRC), Innovate UK, British Council, and EU Horizon 2020. Dr Gunes is the former President of the Association for the Advancement of Affective Computing (2017-2019), the General Co-Chair of ACII 2019, and the Program Co-Chair of ACM/IEEE HRI 2020 and IEEE FG 2017. She was the Chair of the Steering Board of IEEE Transactions on Affective Computing in the period of 2017-2019. She has also served as an Associate Editor for IEEE Transactions on Affective Computing, IEEE Transactions on Multimedia, and Image and Vision Computing Journal. In 2019, Dr Gunes was awarded the prestigious EPSRC Fellowship to investigate adaptive robotic emotional intelligence for wellbeing (2019-2024) and was named a Faculty Fellow of the Alan Turing Institute – UK’s national centre for data science and artificial intelligence.

Talk - Data-driven Robot Social Intelligence

Designing artificially intelligent systems and interfaces with socio-emotional skills is a challenging task. Progress in industry and developments in academia give us a positive outlook; however, the artificial social and emotional intelligence of current technology is still limited. My lab’s research has been pushing the state of the art in a wide spectrum of research topics in this area, including the design and creation of new datasets; novel feature representations and learning algorithms for sensing and understanding human nonverbal behaviours in solo, dyadic and group settings; and the data-driven generation of socially appropriate agent behaviours. In this talk, I will present some of my research team’s explorations in these areas, including audio-driven robot upper-body motion synthesis, and a dataset and a continual learning approach for assessing social appropriateness of robot actions.

Louis-Philippe Morency (Carnegie Mellon University)

Biography

Louis-Philippe Morency is an Associate Professor in the Language Technologies Institute at Carnegie Mellon University, where he leads the Multimodal Communication and Machine Learning Laboratory (MultiComp Lab). He was formerly research faculty in the Computer Sciences Department at University of Southern California and received his Ph.D. degree from MIT Computer Science and Artificial Intelligence Laboratory. His research focuses on building the computational foundations to enable computers with the abilities to analyze, recognize and predict subtle human communicative behaviors during social interactions. He received diverse awards including AI’s 10 to Watch by IEEE Intelligent Systems, NetExplo Award in partnership with UNESCO and 10 best paper awards at IEEE and ACM conferences. His research was covered by media outlets such as Wall Street Journal, The Economist and NPR.

Talk - Multimodal AI: Learning Nonverbal Signatures

Human face-to-face communication is a little like a dance, in that participants continuously adjust their behaviors based on verbal and nonverbal cues from the social context. Today's computers and interactive devices are still lacking many of these human-like abilities to hold fluid and natural interactions. One such capability is to generate natural-looking gestures when animating a virtual avatar or robot. In this talk, I will present some of our recent work towards learning nonverbal signatures of human speakers. Central to this research problem is the technical challenge of grounding, which in this case links spoken language with generated nonverbal gestures. This is one key step towards our longer-term vision of Multimodal AI, a family of technologies able to analyze, recognize and generate subtle human communicative behaviors in social context.

Accepted papers

Probabilistic Human-like Gesture Synthesis from Speech using GRU-based WGAN

Bowen Wu, Chaoran Liu, Carlos Ishi, and Hiroshi Ishiguro

Gestures are crucial for increasing the human-likeness of agents and robots to achieve smoother interactions with humans. The realization of an effective system to model human gestures, which are matched with the speech utterances, is necessary to be embedded in these agents. In this work, we propose a GRU-based autoregressive generation model for gesture generation, which is trained with a CNN-based discriminator in an adversarial manner using a WGAN-based learning algorithm. The model is trained to output the rotation angles of the joints in the upper body, and implemented to animate a CG avatar. The motions synthesized by the proposed system are evaluated via an objective measure and a subjective experiment, showing that the proposed model outperforms a baseline model which is trained by a state-of-the-art GAN-based algorithm, using the same dataset. This result reveals that it is essential to develop a stable and robust learning algorithm for training gesture generation models. Our code can be found in https://github.com/wubowen416/gesture-generation.

Influence of Movement Energy and Affect Priming on the Perception of Virtual Characters Extroversion and Mood

Tanja Schneeberger, Fatima Ayman Aly, Daksitha Withanage Don, Katharina Gies, Zita Zeimer, Fabrizio Nunnari, and Patrick Gebhard

Movement Energy – physical activeness in performing actions and Affect Priming – prior exposure to information about someone’s mood and personality might be two crucial factors that influence how we perceive someone. It is unclear if these factors influence the perception of virtual characters in a way that is similar to what is observed during in-person interactions. This paper presents different configurations of Movement Energy for virtual characters and two studies about how these influence the perception of the characters’ personality, extroversion in particular, and mood. Moreover, the studies investigate how Affect Priming (Personality and Mood), as one form of contextual priming, influences this perception. The results indicate that characters with high Movement Energy are perceived as more extrovert and in a better mood, which corroborates existing research. Moreover, the results indicate that Personality and Mood Priming influence perception in different ways. Characters that were primed as being in a positive mood are perceived as more extrovert, whereas characters that were primed as being introverted are perceived in a more positive mood.

Crossmodal clustered contrastive learning: Grounding of spoken language to gesture

Dong Won Lee, Chaitanya Ahuja, and Louis-Philippe Morency

Crossmodal grounding is a key challenge for the task of generating relevant and well-timed gestures from just spoken language as an input. Often, the same gesture can be accompanied by semantically different spoken language phrases which makes crossmodal grounding especially challenging. For example, a deictic gesture of spanning a region could co-occur with semantically different phrases "entire bottom row" (referring to a physical point) and "molecules expand and decay" (referring to a scientific phenomenon). In this paper, we introduce a self-supervised approach to learn such many-to-one grounding relationships between spoken language and gestures. As part of this approach, we propose a new contrastive loss function, Crossmodal Cluster NCE, that guides the model to learn spoken language representations which are consistent with the similarities in the gesture space. By doing so, we impose a greater level of grounding between spoken language and gestures in the model. We demonstrate the effectiveness of our approach on a publicly available dataset through quantitative and qualitative studies. Our proposed methodology significantly outperforms prior approaches for grounding gestures to language. Link to code: https://github.com/dondongwon/CC_NCE_GENEA.

Organising committee

The main contact address of the workshop is: genea-contact@googlegroups.com.

Workshop organisers

Program committee

- Chaitanya Ahuja (Carnegie Mellon University, United States of America)

- Sean Andrist (Microsoft Research, United States of America)

- Jonas Beskow (KTH Royal Institute of Technology, Sweden)

- Carlos Busso (University of Texas at Dallas, United States of America)

- Oya Celiktutan (King’s College London, United Kingdom)

- Ylva Ferstl (Trinity College Dublin, Ireland)

- Carlos Ishi (Advanced Telecommunications Research Institute International, Japan)

- Minsu Jang (Electronics and Telecommunications Research Institute, South Korea)

- James Kennedy (Disney Research, United States of America)

- Stefan Kopp (Bielefeld University, Germany)

- Zofia Malisz (KTH Royal Institute of Technology, Sweden)

- Rachel McDonnell (Trinity College Dublin, Ireland)

- Michael Neff (University of California, Davis, United States of America)

- Catherine Pelachaud (Sorbonne University, France)

- Wim Pouw (Radboud University, the Netherlands)

- Tiago Ribeiro (Soul Machines, New Zealand)